The Causal Testing Framework 💻

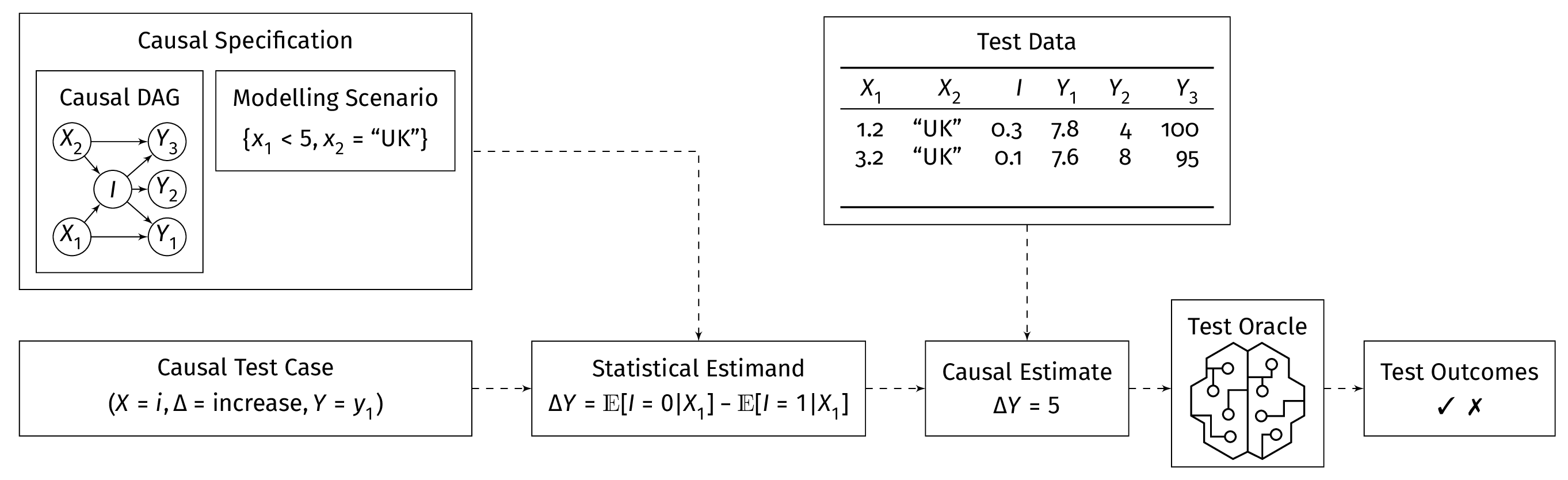

As part of my project work, I work as a research software engineer on the CITCOM project, led by Dr. Neil Walkinshaw, where I support the development and maintenance of the Causal Testing Framework: a Python framework that uses causal inference to drive functional black-box testing of complex software.

In simple terms, we’re solving the problem of how to reliably test complex computational models. Testing such models presents several key challenges, including:

- Lack of ground truth makes it difficult to know the “correct” answer.

- Large parameter spaces mean testing at scale can be computationally costly.

- Stochastic simulations can produce different outputs for the same input, making robust, reliable testing difficult to achieve.

To address these challenges, our framework uses causal inference, drawing on the tester’s subject-matter knowledge to model the relationships between inputs and outputs more accurately than traditional testing methods. For more details on the theory behind the Causal Testing Framework, check out the following papers:

- Metamorphic Testing with Causal Graphs

- Testing Causality in Scientific Modelling Software

- Causal Test Adequacy
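To give a rough feel for the idea of using subject-matter knowledge to model input/output relationships, here is a minimal, standard-library-only sketch. It does **not** use the Causal Testing Framework's API. Everything in it is a hypothetical assumption for illustration: a toy stochastic model `run_model` with one input `X`, one output `Y` and a confounding variable `Z`, plus a hand-written causal graph `DAG` standing in for the tester's domain knowledge. The sketch compares a naive difference in means with a backdoor-adjusted estimate of the effect of `X` on `Y`, then checks the adjusted estimate against an expected value.

```python
import random
from collections import defaultdict
from statistics import mean

# Hypothetical causal DAG encoding the tester's domain knowledge:
#   Z -> X, Z -> Y, X -> Y   (Z confounds the effect of X on Y)
DAG = {"Z": ["X", "Y"], "X": ["Y"], "Y": []}


def parents(dag, node):
    """Return the direct causes of `node` in the DAG."""
    return [p for p, children in dag.items() if node in children]


def run_model(rng):
    """Toy stochastic 'simulation' standing in for the software under test."""
    z = rng.random() < 0.5                    # binary confounder
    x = rng.random() < (0.8 if z else 0.2)    # Z makes X=1 more likely
    y = 2.0 * x + 3.0 * z + rng.gauss(0, 1)   # true effect of X on Y is 2.0
    return {"Z": int(z), "X": int(x), "Y": y}


def naive_effect(data):
    """Difference in mean Y between X=1 and X=0, ignoring the DAG."""
    treated = [r["Y"] for r in data if r["X"] == 1]
    control = [r["Y"] for r in data if r["X"] == 0]
    return mean(treated) - mean(control)


def adjusted_effect(data, adjustment_set):
    """Backdoor adjustment by stratification:
    sum over strata s of P(s) * (E[Y | X=1, s] - E[Y | X=0, s])."""
    strata = defaultdict(list)
    for r in data:
        strata[tuple(r[v] for v in adjustment_set)].append(r)
    effect = 0.0
    for rows in strata.values():
        weight = len(rows) / len(data)
        treated = [r["Y"] for r in rows if r["X"] == 1]
        control = [r["Y"] for r in rows if r["X"] == 0]
        effect += weight * (mean(treated) - mean(control))
    return effect


rng = random.Random(42)
data = [run_model(rng) for _ in range(20_000)]  # logged runs of the model

# Adjusting for the parents of the input is one valid choice of adjustment set
adjustment_set = parents(DAG, "X")
naive = naive_effect(data)
adjusted = adjusted_effect(data, adjustment_set)

# Hypothetical causal test: is the estimated effect of X on Y close to the
# value the tester expects from domain knowledge?
EXPECTED_EFFECT, TOLERANCE = 2.0, 0.2
passed = abs(adjusted - EXPECTED_EFFECT) < TOLERANCE
print(f"naive={naive:.2f} adjusted={adjusted:.2f} test passed={passed}")
```

In this toy setup the naive estimate is biased upwards (roughly 3.8 in expectation) because `Z` pushes both `X` and `Y` up, while the adjusted estimate lands close to the true effect of 2.0. Averaging over many runs also smooths out the stochastic noise that makes single-run comparisons unreliable. How the real framework identifies adjustment sets, estimates effects and defines test outcomes may differ from this sketch.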

For more practical information on the tool, see our recent software paper, led by Dr. Michael Foster and published in the Journal of Open Source Software, which outlines the concept of causal testing at a high level. You can also find our codebase, including examples and documentation, in our GitHub repository.